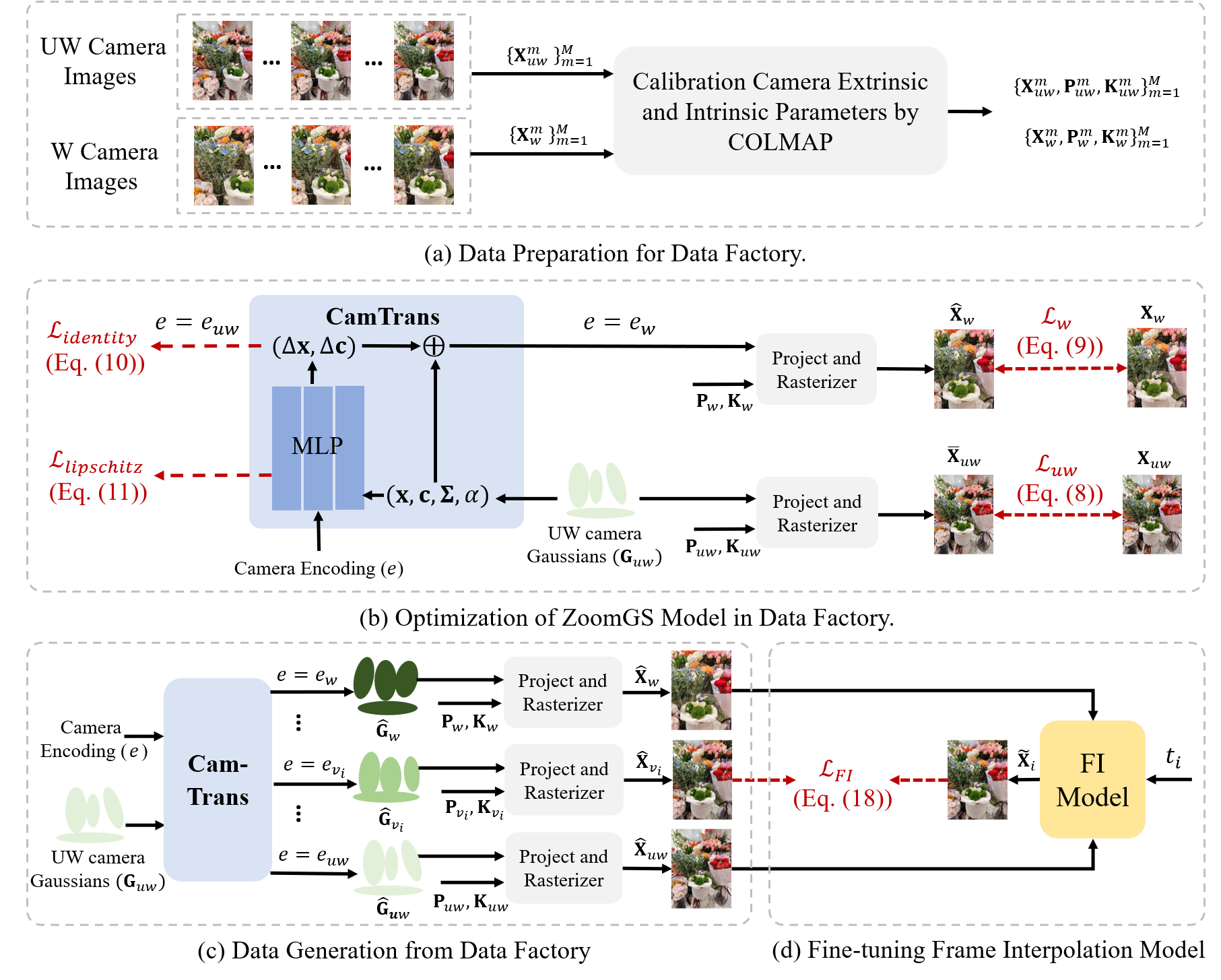

DCSZ Overview

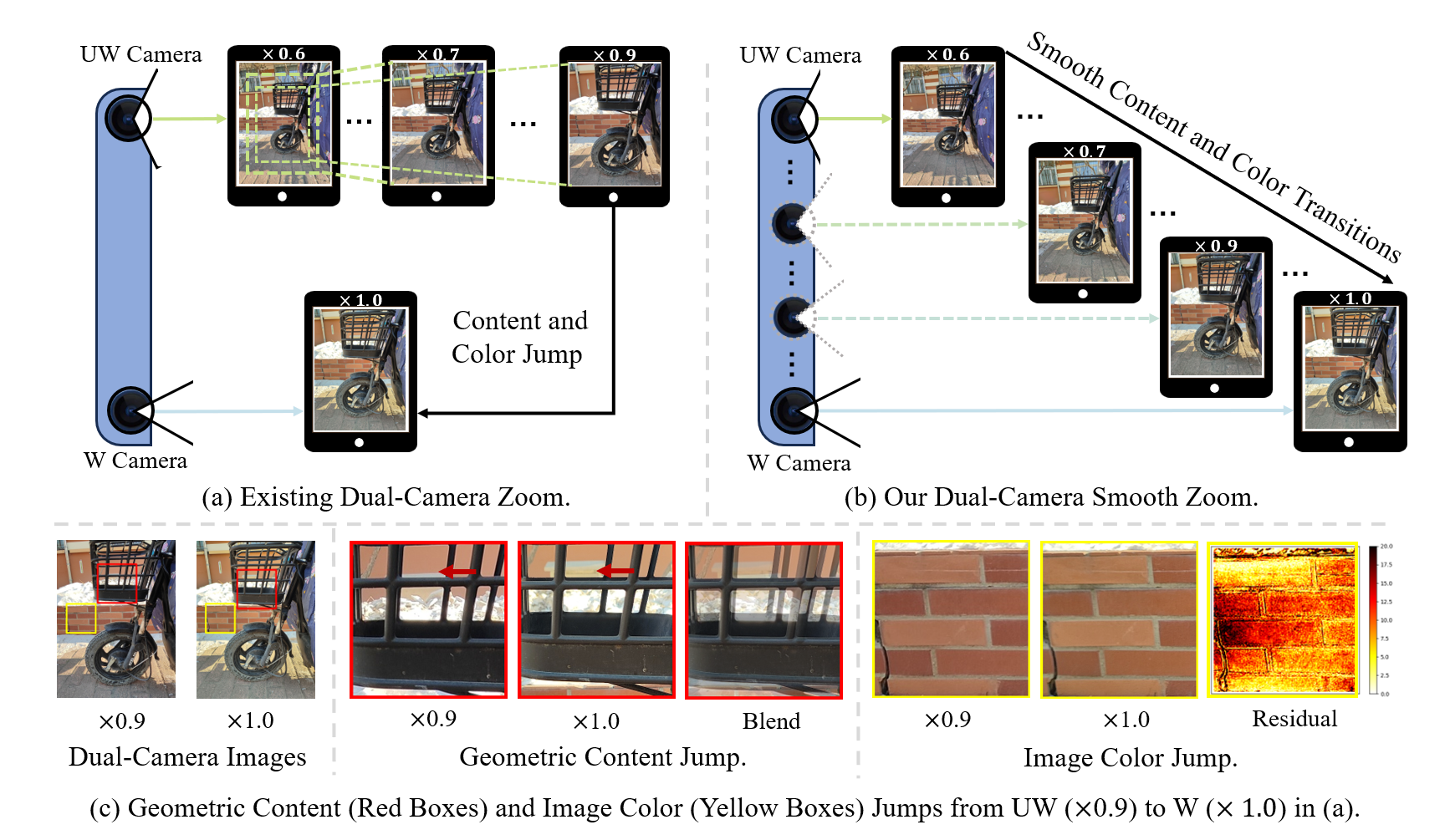

(a) Existing dual-camera zoom. To zoom between the UW (ultra-wide) and W (wide) cameras (i.e., from ×0.6 to ×1.0), smartphones (e.g., Xiaomi, OPPO, and vivo) generally crop a specific region from the UW image and upscale it to the original image dimensions. When the zoom factor changes from 0.9 to 1.0, the camera has to switch from UW to W, which causes noticeable jumps in geometric content and image color in the preview. (b) Our proposed dual-camera smooth zoom (DCSZ). (c) Geometric content and image color jumps in the existing dual-camera zoom shown in (a). Some examples are shown below.
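The crop-and-upscale digital zoom described in (a) can be sketched roughly as below. This is a minimal illustration, not the behavior of any particular phone and not the DCSZ method. It assumes the UW camera corresponds to ×0.6, that the crop is centered, that resizing uses nearest-neighbor sampling, that images are nested lists of pixel values, and that the lens switches to W at exactly ×1.0. The names `crop_and_upscale` and `select_camera` are hypothetical.

```python
UW_BASE_ZOOM = 0.6   # assumed zoom factor of the ultra-wide (UW) camera
SWITCH_ZOOM = 1.0    # assumed zoom factor at which the preview switches to W


def select_camera(zoom):
    """Return which camera feeds the preview at a given zoom factor (assumed rule)."""
    return "UW" if zoom < SWITCH_ZOOM else "W"


def crop_and_upscale(img, zoom, base_zoom=UW_BASE_ZOOM):
    """Digital zoom on a UW frame: center-crop, then resize back to full size.

    img is a list of rows (H x W). The kept fraction of the field of view
    is base_zoom / zoom, e.g. 0.6 / 0.9 = 2/3 of each dimension at x0.9.
    """
    if zoom < base_zoom:
        raise ValueError("zoom must be >= the UW base zoom factor")
    h, w = len(img), len(img[0])
    scale = base_zoom / zoom
    ch, cw = max(1, round(h * scale)), max(1, round(w * scale))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = [row[left:left + cw] for row in img[top:top + ch]]
    # Nearest-neighbor upscale of the ch x cw crop back to h x w.
    return [[crop[y * ch // h][x * cw // w] for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    frame = [[y * 10 + x for x in range(10)] for y in range(10)]
    for z in (0.6, 0.9):
        print(z, select_camera(z), crop_and_upscale(frame, z)[0][:4])
    print(1.0, select_camera(1.0))
```

In this sketch, every zoom step below ×1.0 is an upscaled crop of the same UW frame. At ×1.0, the source changes to a native W frame captured by a different lens and image pipeline, so any misalignment or color difference between the two cameras appears all at once in the preview.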
